Complete Guide

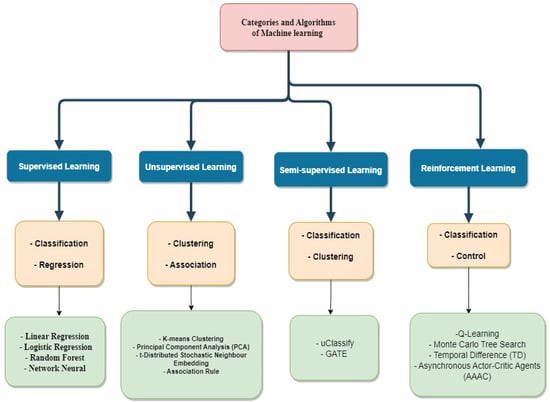

A machine learning project needs more than a good idea; it also needs a framework that fits the problem type. The choice of framework affects development speed, model performance, and scalability. This guide, written by an SEO specialist with a background in data science and AI, surveys the main ML frameworks and matches them to problem types, from computer vision and natural language processing to predictive analytics on tabular data.

Understanding the Landscape of Machine Learning Frameworks

The ecosystem of machine learning frameworks is rich and varied, each designed with unique strengths and target use cases. While some are general-purpose powerhouses, others are highly specialized tools. Understanding their core philosophies and capabilities is the first step toward successful model training and deployment.

The Core Pillars: TensorFlow and PyTorch

When discussing modern machine learning, especially deep learning and complex neural networks, two names invariably dominate the conversation: TensorFlow and PyTorch. Both are open-source Python libraries that provide extensive functionalities for building and training sophisticated models, yet they cater to slightly different preferences and project needs.

- TensorFlow: Scalability and Production Readiness

Developed by Google, TensorFlow is known for its production tooling and scalability. It is a strong choice for large-scale deployments, especially in enterprise environments where cross-platform compatibility and efficient resource utilization matter. Models can be deployed to targets ranging from mobile and edge devices to large distributed systems. TensorFlow 1.x required building a static graph before running it; TensorFlow 2.x executes eagerly by default and uses `tf.function` to trace Python code into graphs. Graph execution is less convenient for interactive experimentation, but it enables optimization and efficient deployment. The ecosystem includes TensorFlow Lite for mobile/IoT, TensorFlow.js for web, and TensorFlow Extended (TFX) for full-lifecycle ML operations (MLOps).

- Use Case Strengths: Large-scale production systems, distributed training, mobile and edge device deployment, complex model architectures.

- Actionable Tip: If your project requires seamless transition from research to production or demands high scalability, TensorFlow's mature MLOps ecosystem and deployment flexibility make it a strong contender. Consider its Keras API for easier model building.

- PyTorch: Flexibility and Research Agility

Originally developed by Facebook's AI Research (FAIR) lab, now part of Meta, and governed since 2022 by the PyTorch Foundation under the Linux Foundation, PyTorch has rapidly gained popularity, particularly within the research community, due to its intuitive, Python-centric API and dynamic computation graph. This "define-by-run" approach builds the graph as the code executes, so standard Python debuggers and control flow work as expected and researchers can iterate quickly on new neural network designs. That flexibility makes it well suited to cutting-edge research and rapid prototyping.

- Use Case Strengths: Academic research, rapid prototyping, dynamic model architectures, projects requiring high flexibility and ease of debugging.

- Actionable Tip: For projects where rapid iteration, complex custom models, or a highly intuitive Pythonic interface are priorities, PyTorch offers unparalleled agility. It's particularly favored for developing novel deep learning models.
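
To make "define-by-run" concrete, here is a minimal sketch, assuming PyTorch is installed. The `DynamicDepthNet` class and its `n_blocks` argument are hypothetical names invented for this illustration; the point is only that ordinary Python control flow decides the shape of the graph on each call.

```python
import torch
from torch import nn


class DynamicDepthNet(nn.Module):
    """Toy network whose depth is chosen per forward pass (hypothetical example)."""

    def __init__(self, in_features: int = 4, hidden: int = 8):
        super().__init__()
        self.inp = nn.Linear(in_features, hidden)
        self.block = nn.Linear(hidden, hidden)
        self.out = nn.Linear(hidden, 1)

    def forward(self, x: torch.Tensor, n_blocks: int) -> torch.Tensor:
        h = torch.relu(self.inp(x))
        # Ordinary Python loop: the graph is rebuilt on every call,
        # so the number of repeated blocks can differ between calls.
        for _ in range(n_blocks):
            h = torch.relu(self.block(h))
        return self.out(h)


torch.manual_seed(0)
model = DynamicDepthNet()
x = torch.randn(3, 4)

shallow = model(x, n_blocks=1)
deep = model(x, n_blocks=3)

# Autograd differentiates through whichever path was actually executed.
deep.sum().backward()
block_grad = model.block.weight.grad
```

Because the forward pass is plain Python, a breakpoint or `print` inside the loop behaves exactly as it would in any other script.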

Scikit-learn: The Go-To for Traditional ML

For a vast array of common machine learning tasks that don't necessarily require deep neural networks, Scikit-learn stands out as an indispensable tool. This Python library provides a simple, efficient, and comprehensive suite of tools for predictive analytics, including classification, regression, clustering, dimensionality reduction, model selection, and preprocessing. It's built on NumPy, SciPy, and Matplotlib, integrating seamlessly with the Python scientific stack. Scikit-learn is the workhorse for most projects involving structured or tabular data.

- Use Case Strengths: Classification, regression, clustering, dimensionality reduction, traditional machine learning models (e.g., SVMs, Random Forests, K-Means), feature engineering, data preprocessing.

- Actionable Tip: Before jumping into deep learning, always consider if Scikit-learn can solve your problem. For most tabular data problems, its algorithms often provide excellent performance with less computational overhead and complexity compared to deep learning frameworks.
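
As a rough illustration of the "try Scikit-learn first" advice, the sketch below fits a scaled logistic regression baseline. The choice of the bundled breast cancer dataset and of logistic regression is an assumption made for the example, not a recommendation from this guide.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Small tabular dataset that ships with scikit-learn (no download needed).
X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Scaling and the classifier live in one pipeline, so the scaler is
# fitted on the training split only.
baseline = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
baseline.fit(X_train, y_train)

baseline_accuracy = accuracy_score(y_test, baseline.predict(X_test))
```

A baseline like this trains in well under a second on a laptop CPU and gives a reference score that any deep learning model would need to beat to justify its extra cost.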

Specialized Frameworks for Specific Challenges

Beyond the general-purpose giants, several specialized frameworks and libraries excel in niche areas or offer high-level abstractions.

- XGBoost and LightGBM: Gradient Boosting Powerhouses

For winning machine learning competitions and delivering top performance on tabular data, gradient boosting libraries like XGBoost and LightGBM are often the tools of choice. These optimized implementations of gradient-boosted decision trees provide strong speed and accuracy for classification and regression tasks. They handle missing values natively, and because they are tree-based they are fairly robust to outliers in the features and capture non-linear relationships without manual feature transforms.

- Use Case Strengths: High-performance classification and regression on structured data, Kaggle competitions, fraud detection, customer churn prediction.

- Actionable Tip: For highly accurate predictions on structured datasets, especially those with many features, integrate XGBoost or LightGBM into your workflow. They often outperform simpler models and can be combined with Scikit-learn for preprocessing.

- Keras: High-Level API for Rapid Prototyping

Keras is best known as the high-level API for TensorFlow, but since Keras 3 it is a multi-backend library that can also run on top of PyTorch and JAX. It's designed for fast experimentation with deep neural networks, focusing on user-friendliness and modularity. Keras simplifies the process of building, training, and evaluating deep learning models, making it an excellent entry point for beginners and a productivity booster for experienced practitioners.

- Use Case Strengths: Rapid prototyping of deep learning models, educational purposes, projects where quick iteration on model architectures is key.

- Actionable Tip: If you find TensorFlow or PyTorch's lower-level APIs daunting, Keras offers a streamlined path to building sophisticated neural networks with fewer lines of code. It's ideal for getting started with deep learning.

- Apache Spark MLlib: Machine Learning on Big Data

For organizations dealing with truly massive datasets that exceed the memory capacity of a single machine, Apache Spark MLlib provides a scalable solution. As part of the Apache Spark ecosystem, MLlib offers a distributed machine learning library that can process data across clusters, making it suitable for big data analytics and large-scale parallel processing. It includes a range of algorithms for classification, regression, clustering, and collaborative filtering.

- Use Case Strengths: Machine learning on extremely large datasets, distributed computing, integrating ML with big data pipelines.

- Actionable Tip: When your data scales beyond what traditional single-machine frameworks can handle, exploring Spark MLlib is crucial. It enables you to leverage distributed computing power for your model training.

Matching Frameworks to Problem Types: A Strategic Approach

The true art of selecting the best machine learning frameworks lies in aligning their strengths with the specific demands of your project. Here, we delve into common problem types and recommend the optimal tools.

Computer Vision and Image Recognition

Computer vision tasks, such as image classification, object detection, and segmentation, are at the forefront of deep learning applications. They typically involve complex convolutional neural networks (CNNs).

- Recommended Frameworks: PyTorch, TensorFlow (often with Keras).

- Why: Both offer robust support for CNN architectures, extensive pre-trained models (e.g., ResNet, VGG, EfficientNet), and powerful GPU acceleration. PyTorch is favored for its flexibility in custom layer development and dynamic graph, while TensorFlow excels in deployment and scaling for production.

- Practical Advice:

- Leverage Transfer Learning: Start with pre-trained models on large datasets like ImageNet. Fine-tuning these models is often more effective and faster than training from scratch.

- Data Augmentation is Key: Augment your image dataset (rotations, flips, crops) to improve model generalization and prevent overfitting.

- Experiment with Architectures: Don't stick to one model. Try different CNN architectures and hyperparameter tuning strategies to find the best fit for your specific image data.
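
The augmentation advice above can be sketched with nothing but NumPy. The `augment` helper below is a hypothetical function written for this illustration; in practice the augmentation utilities that come with your framework would normally do this job, and the random array stands in for a real photo.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator, crop: int = 28) -> np.ndarray:
    """Return a randomly flipped, rotated, and cropped copy of an HxWxC image."""
    out = image
    if rng.random() < 0.5:
        out = out[:, ::-1, :]  # horizontal flip
    # Rotate by 0, 90, 180, or 270 degrees in the height/width plane.
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(0, 1))
    h, w = out.shape[:2]
    top = int(rng.integers(0, h - crop + 1))
    left = int(rng.integers(0, w - crop + 1))
    return np.ascontiguousarray(out[top:top + crop, left:left + crop, :])


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)  # stand-in for a photo

# Eight different views of the same source image.
batch = np.stack([augment(image, rng) for _ in range(8)])
```

Each call produces a slightly different view of the same picture, which is what discourages the model from memorizing exact pixel layouts.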

Natural Language Processing (NLP)

From sentiment analysis to machine translation and text generation, Natural Language Processing (NLP) relies heavily on sequential models like Recurrent Neural Networks (RNNs) and, more recently, Transformers.

- Recommended Frameworks: PyTorch, TensorFlow, and the Hugging Face Transformers library.

- Why: Both PyTorch and TensorFlow provide the necessary building blocks for complex NLP models. Hugging Face Transformers, built on top of both, has become the de facto standard for accessing and utilizing state-of-the-art pre-trained language models (e.g., BERT, GPT, T5), simplifying the application of powerful NLP techniques.

- Practical Advice:

- Start with Pre-trained Models: For most NLP tasks, fine-tuning a pre-trained transformer model (via Hugging Face) will yield superior results compared to building models from scratch.

- Tokenization Matters: Pay close attention to the tokenizer used with your pre-trained model; it's crucial for correct input formatting.

- Consider Domain-Specific Fine-tuning: If your text data is highly specialized, consider further pre-training a general model on your domain-specific corpus before fine-tuning for your task.

Tabular Data Analysis and Predictive Modeling

This category encompasses a vast range of problems, from customer churn prediction to financial forecasting, where data is typically structured in rows and columns.

- Recommended Frameworks: Scikit-learn, XGBoost, LightGBM.

- Why: For most tabular data, traditional machine learning models often outperform or match deep learning models while being faster to train and easier to interpret. Scikit-learn provides a comprehensive suite, while XGBoost and LightGBM offer highly optimized gradient boosting algorithms for top-tier performance.

- Practical Advice:

- Feature Engineering First: Invest significant time in creating meaningful features from your raw data. This often has a greater impact than choosing a complex model.

- Cross-Validation is Essential: Always use robust cross-validation techniques to get reliable estimates of your model's performance and prevent overfitting.

- Ensemble Methods: Combine predictions from multiple models of the same task type (e.g., a Scikit-learn random forest classifier with an XGBoost classifier) for improved robustness and accuracy.

Reinforcement Learning

Reinforcement learning involves training agents to make sequential decisions in an environment to maximize a reward signal. This is common in robotics, game AI, and autonomous systems.

- Recommended Frameworks: TensorFlow, PyTorch (often with libraries like Stable Baselines3 or Ray RLlib).

- Why: Both deep learning frameworks provide the necessary computational graph and automatic differentiation capabilities crucial for implementing complex RL algorithms like Deep Q-Networks (DQNs) or Proximal Policy Optimization (PPO). Dedicated RL libraries abstract away much of the complexity.

- Practical Advice:

- Define Your Environment Clearly: A well-defined environment with clear states, actions, and reward signals is fundamental to successful RL.

- Start Simple: Begin with simpler RL algorithms or environments before scaling up to more complex problems.

- Monitor Training Progress: Use tools like TensorBoard (which works with both TensorFlow and PyTorch) or Weights & Biases to track rewards, losses, and other metrics during the often long and volatile RL training process.
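
In the spirit of "start simple", here is a minimal sketch of tabular Q-learning, a much simpler relative of the DQN and PPO algorithms named above. The five-cell corridor environment, the `step` function, and all hyperparameter values are hypothetical and exist only to show how states, actions, and rewards fit together.

```python
import numpy as np

N_STATES, GOAL = 5, 4      # corridor cells 0..4, reward at the right end
ACTIONS = (-1, +1)         # index 0 = step left, index 1 = step right


def step(state: int, action: int):
    """Environment dynamics: returns (next_state, reward, done)."""
    next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


rng = np.random.default_rng(0)
q = np.zeros((N_STATES, len(ACTIONS)))
alpha, gamma, epsilon = 0.5, 0.9, 0.2   # learning rate, discount, exploration rate

for _ in range(500):                    # episodes
    state, done = 0, False
    while not done:
        if rng.random() < epsilon:
            action = int(rng.integers(len(ACTIONS)))          # explore
        else:
            best = np.flatnonzero(q[state] == q[state].max())
            action = int(rng.choice(best))                    # exploit, random tie-break
        next_state, reward, done = step(state, action)
        # Q-learning update: bootstrap from the best action in the next state.
        target = reward + (0.0 if done else gamma * q[next_state].max())
        q[state, action] += alpha * (target - q[state, action])
        state = next_state

greedy_policy = q[:GOAL].argmax(axis=1)  # best action in each non-terminal state
```

After training, the greedy policy should choose "step right" in every non-terminal cell. Deep RL libraries replace the table `q` with a neural network, but the loop has the same structure.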

Time Series Forecasting

Time series forecasting predicts future values from historical time-stamped data. It is common in finance, weather, and demand forecasting.

- Recommended Frameworks: `statsmodels` or `pmdarima` (for classical methods), Scikit-learn (for regression on lag features), PyTorch or TensorFlow (for deep learning approaches like LSTMs or Transformers).

- Why: Classical models such as ARIMA and Exponential Smoothing live in `statsmodels`, and `pmdarima` wraps ARIMA in a Scikit-learn-style interface; Scikit-learn itself does not implement them, but its regressors work well on lag features. Deep learning frameworks are needed for neural network models that can capture more intricate temporal patterns.

- Practical Advice:

- Handle Seasonality and Trends: Preprocessing your time series to account for seasonal patterns and trends often significantly improves forecasting accuracy.

- Lag Features: Create lagged versions of your target variable and other relevant features; these are crucial for time series models.

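- Walk-Forward Validation: For time series, shuffled k-fold cross-validation isn't suitable because it lets the model train on observations that come after the ones it is tested on. Use walk-forward validation (training on past data, predicting the next block, then moving the window forward) for robust evaluation.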
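
Lag features and walk-forward validation can be sketched together. The synthetic series, the choice of seven lags, and the Ridge model are assumptions made for the example. Note that Scikit-learn's `TimeSeriesSplit` grows the training window by default, whereas a strict sliding window would need its `max_train_size` argument.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import TimeSeriesSplit

# Synthetic daily series: linear trend + weekly seasonality + noise.
rng = np.random.default_rng(0)
t = np.arange(400)
series = 0.05 * t + 2.0 * np.sin(2 * np.pi * t / 7) + rng.normal(scale=0.3, size=t.size)

# Lag features: column k-1 holds the value observed k steps before the target.
N_LAGS = 7
X = np.column_stack(
    [series[N_LAGS - k: len(series) - k] for k in range(1, N_LAGS + 1)]
)
y = series[N_LAGS:]

# Walk-forward evaluation: each fold trains on the past, tests on the next block.
fold_mae = []
for train_idx, test_idx in TimeSeriesSplit(n_splits=5).split(X):
    assert train_idx.max() < test_idx.min()  # no future data leaks into training
    model = Ridge(alpha=1.0).fit(X[train_idx], y[train_idx])
    fold_mae.append(mean_absolute_error(y[test_idx], model.predict(X[test_idx])))

mean_mae = float(np.mean(fold_mae))
```

The same lagged matrix can be fed to a gradient boosting model or a neural network; only the estimator inside the loop changes.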

Big Data Machine Learning

Big data machine learning applies when datasets are too large to fit into memory on a single machine, so training requires distributed computing.

- Recommended Frameworks: Apache Spark MLlib, distributed TensorFlow/PyTorch.

- Why: Spark MLlib is purpose-built for distributed processing of large datasets. TensorFlow and PyTorch also offer distributed training capabilities, allowing models to be trained across multiple GPUs or machines.

- Practical Advice:

- Data Partitioning: Ensure your data is optimally partitioned across the cluster to minimize data shuffling.

- Resource Management: Configure your cluster resources (memory, CPU cores) appropriately for the size of your dataset and complexity of your model.

- Monitor Distributed Jobs: Use Spark UI or other cluster monitoring tools to track the progress and performance of your distributed machine learning jobs.

Beyond Frameworks: Best Practices for Success

While choosing the best machine learning frameworks is critical, successful project execution also depends on adopting sound data science practices and focusing on the broader development lifecycle. These considerations are vital for effective model deployment and achieving robust predictive analytics.

Choosing the Right Tool for the Job

The "best" framework is subjective and depends entirely on your project's context. Consider:

- Problem Complexity: Is it a simple classification on structured data or a complex generative model?

- Team Expertise: Does your team have more experience with PyTorch's dynamic graphs or TensorFlow's production-ready ecosystem?

- Ecosystem and Community Support: A vibrant community and extensive documentation can significantly accelerate development.

- Deployment Requirements: Will the model run on mobile, edge devices, or cloud servers?

Importance of Data Preprocessing

Regardless of the framework, the quality of your data and its preprocessing directly impacts model performance. This includes handling missing values, encoding categorical features, scaling numerical data, and feature engineering. A clean, well-prepared dataset is the foundation of any successful artificial intelligence project.
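
The preprocessing steps listed above can be sketched as a single Scikit-learn `ColumnTransformer`, assuming pandas is available. The tiny customer table and its column names are invented for the illustration.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical customer table with missing values in both column types.
df = pd.DataFrame(
    {
        "age": [34, 51, np.nan, 27, 45],
        "income": [52000, 88000, 61000, np.nan, 73000],
        "plan": ["basic", "premium", "basic", np.nan, "standard"],
    }
)

# Numeric columns: fill gaps with the median, then standardize.
numeric = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])
# Categorical columns: fill gaps with the most common value, then one-hot encode.
categorical = Pipeline([
    ("impute", SimpleImputer(strategy="most_frequent")),
    ("encode", OneHotEncoder(handle_unknown="ignore")),
])

preprocess = ColumnTransformer(
    [
        ("num", numeric, ["age", "income"]),
        ("cat", categorical, ["plan"]),
    ],
    sparse_threshold=0.0,  # always return a dense array
)

features = preprocess.fit_transform(df)
```

The result has two scaled numeric columns plus one indicator column per plan category. Fitting the transformer on training data only, then reusing it at prediction time, keeps test-set statistics from leaking into training.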

Model Evaluation and Hyperparameter Tuning

Once you've selected a framework and built a model, rigorous evaluation is paramount. Use appropriate metrics (accuracy, precision, recall, F1-score for classification; RMSE, MAE for regression) and robust validation strategies (e.g., k-fold cross-validation). Hyperparameter tuning, the process of optimizing model parameters that are set before training (e.g., learning rate, number of layers, regularization strength), is crucial for maximizing performance. Tools like Optuna or Keras Tuner can automate this process, making your model training more efficient.
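
A minimal sketch of cross-validated hyperparameter tuning follows. It uses Scikit-learn's `GridSearchCV` as a stand-in for Optuna or Keras Tuner; the dataset, the logistic regression model, and the grid of `C` values are assumptions for the example.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("clf", LogisticRegression(max_iter=2000)),
])

# Regularization strength C is a hyperparameter: it is fixed before fitting.
search = GridSearchCV(
    pipeline,
    param_grid={"clf__C": [0.01, 0.1, 1.0, 10.0]},
    cv=5,          # 5-fold cross-validation on the training split only
    scoring="f1",
)
search.fit(X_train, y_train)

best_c = search.best_params_["clf__C"]
# The held-out test split is touched once, after tuning is finished.
test_f1 = f1_score(y_test, search.predict(X_test))
```

Keeping the test split out of the search is the important design choice here: the cross-validated score selects the hyperparameter, and the test score estimates how the chosen model generalizes.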

Scalability and Deployment Considerations

Think beyond training. How will your model be served in a production environment? Considerations include latency, throughput, resource utilization, and monitoring. Frameworks like TensorFlow and PyTorch offer excellent tools for exporting models for deployment, while cloud platforms provide managed services for scalable serving.

Frequently Asked Questions

What is the main difference between TensorFlow and PyTorch?

The primary difference has historically been how they execute the computation graph. TensorFlow 1.x used a static graph, defined before execution, which helps optimization and deployment; TensorFlow 2.x runs eagerly by default and builds graphs on request through `tf.function`. PyTorch uses a dynamic, "define-by-run" graph, which makes it flexible, easy to debug, and popular in research for its Pythonic feel. Both are powerful for deep learning and neural networks, but PyTorch often feels more agile for experimentation, while TensorFlow is often seen as more production-ready out of the box for large-scale deployments.

Is Scikit-learn suitable for deep learning?

No, Scikit-learn is not designed for deep learning. It specializes in traditional machine learning algorithms for structured data, such as classification, regression, clustering, and dimensionality reduction. It does include a basic multi-layer perceptron (`MLPClassifier`, `MLPRegressor`) and can handle feature engineering for deep learning pipelines, but it lacks the GPU acceleration and specialized layers (like convolutional or recurrent layers) required for training complex neural networks. For deep learning, you would use frameworks like TensorFlow or PyTorch.

Which machine learning framework is best for beginners?

For beginners, Scikit-learn is often the best starting point for understanding fundamental machine learning concepts due to its simple API and comprehensive set of traditional algorithms. For an introduction to deep learning, Keras (as a high-level API for TensorFlow or PyTorch) is highly recommended. Its intuitive, modular design allows beginners to build and train neural networks with minimal code, abstracting away much of the underlying complexity of lower-level frameworks.

Can I combine different machine learning frameworks in one project?

Yes, absolutely! It's a common practice to combine different machine learning frameworks within a single project to leverage their respective strengths. For instance, you might use Scikit-learn for initial data preprocessing and feature engineering, then train a complex deep learning model with PyTorch or TensorFlow, and finally deploy it using a framework-agnostic serving solution or one optimized for production. This modular approach allows you to pick the best machine learning frameworks for each specific task in your pipeline.
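
As a rough sketch of such a mixed pipeline, the example below scales features with Scikit-learn and then trains a small PyTorch classifier on them. It assumes both libraries are installed; the dataset, network size, and training settings are arbitrary choices for illustration.

```python
import torch
from torch import nn
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=0)

# Step 1 (Scikit-learn): fit the scaler on training data only, then reuse it.
scaler = StandardScaler().fit(X_train)
X_train_t = torch.tensor(scaler.transform(X_train), dtype=torch.float32)
X_test_t = torch.tensor(scaler.transform(X_test), dtype=torch.float32)
y_train_t = torch.tensor(y_train, dtype=torch.float32).unsqueeze(1)

# Step 2 (PyTorch): a small feed-forward classifier trained full-batch.
torch.manual_seed(0)
model = nn.Sequential(nn.Linear(X_train_t.shape[1], 16), nn.ReLU(), nn.Linear(16, 1))
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)
loss_fn = nn.BCEWithLogitsLoss()

losses = []
for _ in range(200):
    optimizer.zero_grad()
    loss = loss_fn(model(X_train_t), y_train_t)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

# Step 3: evaluate with plain NumPy; a logit above 0 means class 1.
with torch.no_grad():
    predictions = (model(X_test_t) > 0).squeeze(1).numpy().astype(int)
test_accuracy = float((predictions == y_test).mean())
```

The fitted `scaler` has to travel with the model to production, since the network only ever saw standardized inputs.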
