How to Use Machine Learning for Detecting Fake News and Misinformation: A Comprehensive Guide

In an era inundated with digital information, the proliferation of fake news and misinformation poses a significant threat to societal discourse, public trust, and even democratic processes. As the volume and sophistication of deceptive content continue to escalate, traditional methods of fact-checking struggle to keep pace. This is where machine learning emerges as a pivotal tool, offering scalable and increasingly accurate solutions for identifying and combating disinformation at an unprecedented speed. Understanding how to harness the power of machine learning is no longer just an academic pursuit; it's a critical skill for anyone involved in digital content, media analysis, or information security.

The Escalating Challenge of Fake News and Misinformation

The digital age, while connecting the world, has inadvertently created fertile ground for the rapid spread of falsehoods. Misinformation, defined as false information spread regardless of intent to mislead, and disinformation, which is deliberately false and intended to deceive, erode public trust in institutions, media, and even science. From health hoaxes to political propaganda, the impact is pervasive. The sheer volume of content published daily on platforms like social media makes purely manual verification impractical. Human fact-checkers, though indispensable, are limited by time and resources. This necessitates automated, intelligent systems capable of processing vast datasets and identifying patterns indicative of untruthful content. The demand for robust hoax detection systems has never been higher, pushing the boundaries of what technology can achieve in the realm of information integrity.

The Role of Machine Learning in Combating Disinformation

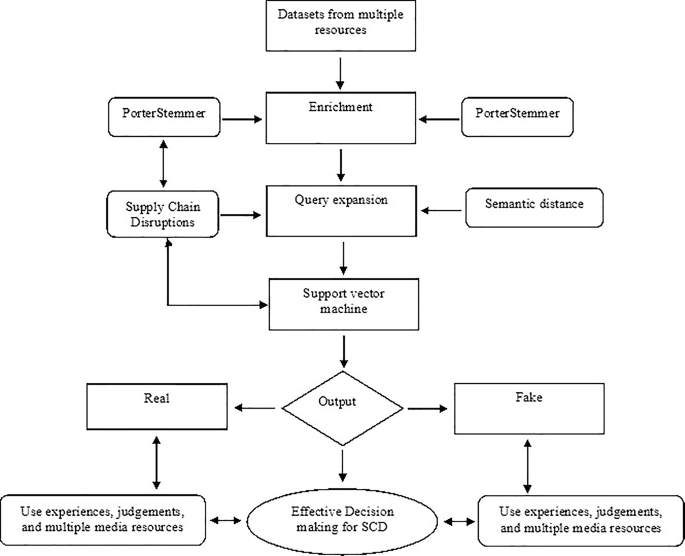

Machine learning, a subset of artificial intelligence, empowers computer systems to learn from data without being explicitly programmed for each task. For detecting fake news, this means training algorithms on large datasets of content labeled as reliable or false to recognize subtle cues, linguistic styles, and propagation patterns associated with false information. Instead of relying on a human to manually flag every suspicious article, ML models can automatically analyze text, images, videos, and even social network dynamics. This capability allows for proactive automated fact-checking and real-time intervention, significantly bolstering efforts in content verification and mitigating the spread of harmful narratives. The goal is to build intelligent systems that can augment human efforts, providing rapid preliminary assessments that allow human experts to focus on the most complex or impactful cases.

Core Machine Learning Approaches for Fake News Detection

Several distinct machine learning paradigms are employed in the fight against misinformation, each leveraging different aspects of the data:

Text-Based Analysis (Natural Language Processing)

The most common approach involves analyzing the textual content of news articles, social media posts, or headlines. This relies heavily on Natural Language Processing (NLP), a field of AI that enables computers to understand, interpret, and generate human language. Key techniques include:

- Feature Extraction: Converting raw text into numerical features that ML models can understand. This includes bag-of-words, TF-IDF (Term Frequency-Inverse Document Frequency) for word importance, n-grams (sequences of words), and more advanced word embeddings (like Word2Vec, GloVe, FastText) that capture semantic relationships between words.

- Linguistic Feature Analysis: Identifying specific writing styles, sentiment (positive, negative, neutral), emotional tone, use of sensational language, grammatical errors, or even rhetorical devices often found in sensationalized or misleading content. This contributes to a deeper semantic analysis.

- Source Credibility: Analyzing the reputation and history of the source publishing the content.

- Machine Learning Models:

  - Traditional ML Algorithms: Naive Bayes, Support Vector Machines (SVMs), Logistic Regression, and Decision Trees are effective for simpler, feature-engineered datasets.

  - Deep Learning Models: Recurrent Neural Networks (RNNs), Long Short-Term Memory networks (LSTMs), and especially Transformer models (like BERT, RoBERTa, GPT) have revolutionized text analysis. These models capture context and nuance far better than bag-of-words features, which makes them powerful for detecting sophisticated linguistic deception, although sarcasm and satire remain difficult even for them.
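
To make the feature extraction step concrete, here is a minimal sketch of TF-IDF weighting in plain Python. The `tokenize` and `tfidf` helpers and the three example headlines are invented for illustration, and the unsmoothed formula `tf * log(N / df)` is only one common variant; libraries typically apply smoothing and normalization on top of it.

```python
import math
from collections import Counter


def tokenize(text):
    # Assumption: lowercase + whitespace split stands in for a real tokenizer.
    return text.lower().split()


def tfidf(docs):
    """Return one {term: weight} dict per document using tf * log(N / df)."""
    tokenized = [tokenize(doc) for doc in docs]
    n_docs = len(tokenized)

    # Document frequency: in how many documents does each term appear?
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))

    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        vectors.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return vectors


# Hypothetical headlines, not taken from any real dataset.
docs = [
    "shocking miracle cure doctors hate",
    "city council approves new budget",
    "miracle cure found say doctors",
]
vectors = tfidf(docs)
```

In this toy corpus, "shocking" appears in only one headline, so it receives a higher weight in the first vector than "miracle", which appears in two. The resulting dictionaries are the kind of numerical features a classifier such as Logistic Regression or Naive Bayes would consume.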

Social Network Analysis

Fake news often spreads differently than legitimate news, particularly on social media. Social media analysis focuses on how information propagates and the characteristics of the users involved:

- Propagation Patterns: Analyzing how quickly content spreads, who shares it, and the structure of the sharing network (e.g., rapid, coordinated bursts might indicate bot activity).

- User Behavior Analysis: Identifying suspicious user accounts (bots, trolls, coordinated inauthentic behavior) based on their posting frequency, followers, following patterns, and content.

- Network Structure: Using graph theory to analyze the connections between users and how information flows through the network. Graph Neural Networks (GNNs) are increasingly used here to model complex relationships.

- Temporal Features: Looking at the time series of posts, comments, and shares to detect unusual spikes or synchronized activity.
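
As a rough illustration of the temporal features above, the sketch below buckets share timestamps into fixed windows and flags windows with unusually many shares. The function name `share_bursts`, the 60-second window, and the threshold of 5 are all assumptions chosen for the example; a real system would tune them against labeled campaigns.

```python
from collections import Counter


def share_bursts(timestamps, window_seconds=60, min_shares=5):
    """Return (window_start, share_count) for every window that holds
    at least `min_shares` shares. Timestamps are seconds since the post."""
    buckets = Counter(int(t // window_seconds) for t in timestamps)
    return sorted(
        (bucket * window_seconds, count)
        for bucket, count in buckets.items()
        if count >= min_shares
    )


# Hypothetical share times: six shares in the first minute, then a trickle.
timestamps = [3, 10, 12, 15, 18, 20, 400, 950, 1800]
bursts = share_bursts(timestamps)
```

Here only the first minute is flagged, with six shares. A burst alone does not prove coordination, since genuine breaking news also spreads quickly, so a flag like this is best treated as one input feature among several.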

Multimedia Content Analysis

Misinformation isn't just text; it often includes manipulated images and videos. This area uses computer vision techniques:

- Image Forensics: Detecting signs of image manipulation, such as inconsistencies in lighting, pixel patterns, or compression artifacts.

- Deepfake Detection: Identifying synthetic media (deepfakes) created using generative AI models. This involves looking for subtle inconsistencies in facial movements, eye blinks, or audio synchronization that are difficult for current generative models to perfect. Convolutional Neural Networks (CNNs) are particularly effective for image and video analysis.

Hybrid Models and Ensemble Learning

The most robust solutions often combine multiple approaches. Hybrid models integrate text, social network, and multimedia features, while ensemble learning techniques combine the predictions of several individual models, which often improves accuracy and makes the result less dependent on the errors of any single model. For example, a system might use an NLP model to analyze the text, a social network model to analyze its spread, and a computer vision model to verify any associated images, then combine these insights for a final verdict.
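
One simple way to combine such signals is a weighted average of per-model scores, sketched below. The model names, scores, and weights are hypothetical, and `fuse_scores` is an illustrative helper rather than an established API; in practice the weights might be learned on a validation set.

```python
def fuse_scores(scores, weights):
    """Weighted average of per-model 'fake' probabilities (each in [0, 1]).

    Only models present in `scores` contribute, so a post without an image
    is scored from the remaining models with the weights renormalized.
    """
    total_weight = sum(weights[name] for name in scores)
    if total_weight == 0:
        raise ValueError("at least one model with non-zero weight is required")
    return sum(scores[name] * weights[name] for name in scores) / total_weight


# Hypothetical outputs of three separate models for one post.
scores = {"text": 0.9, "network": 0.6, "image": 0.3}
weights = {"text": 0.5, "network": 0.3, "image": 0.2}
fused = fuse_scores(scores, weights)

# The same post without an image: weights are renormalized over two models.
fused_no_image = fuse_scores({"text": 0.9, "network": 0.6}, weights)
```

With these numbers the fused score is roughly 0.69, and roughly 0.79 when the image model is absent. Averaging is only one fusion strategy; stacking a second classifier on top of the individual scores is a common alternative.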

Essential Steps to Implement ML for Fake News Detection

Building an effective machine learning system for fake news detection involves several critical phases:

Data Collection and Preprocessing

- Curating Labeled Datasets: This is arguably the most crucial step. You need large, diverse datasets of both real and fake news, meticulously labeled by human fact-checkers. Public datasets like LIAR, FakeNewsNet, or those from reputable fact-checking organizations are invaluable starting points. The quality and representativeness of your data directly impact model performance.

- Data Cleaning: Removing noise, irrelevant information, and inconsistencies. This includes handling missing values, correcting typos, and standardizing formats.

- Tokenization and Normalization: Breaking text into individual words or sub-word units (tokens) and normalizing them (e.g., converting to lowercase, stemming/lemmatization).

- Feature Engineering: Creating relevant features from the raw data. For text, this could involve TF-IDF scores, part-of-speech tags, readability scores, or the presence of specific keywords. For social media, features might include follower counts, tweet frequency, or network centrality. Effective feature engineering can significantly boost model accuracy.
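
The cleaning, tokenization, and feature engineering steps above can be sketched with the standard library alone. The regular expressions, the `preprocess` and `style_features` helpers, and the example post are assumptions made for illustration; stemming or lemmatization would require an additional NLP library and is left out.

```python
import re

URL_RE = re.compile(r"https?://\S+")
TOKEN_RE = re.compile(r"[a-z0-9']+")


def preprocess(text):
    """Lowercase, strip URLs, and split into simple word tokens."""
    text = URL_RE.sub(" ", text.lower())
    return TOKEN_RE.findall(text)


def style_features(text):
    """Two hand-crafted style cues: exclamation marks and all-caps words."""
    words = text.split()
    caps = [w for w in words if len(w) > 1 and w.isupper()]
    return {
        "exclamations": text.count("!"),
        "caps_ratio": len(caps) / len(words) if words else 0.0,
    }


# Hypothetical social media post.
post = "BREAKING: Doctors HATE this cure!!! https://example.com/x"
tokens = preprocess(post)
features = style_features(post)
```

Note that the style features are computed on the raw text, before lowercasing, because normalization would erase exactly the cues (capitalization, punctuation) they are meant to capture.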

Model Selection and Training

- Choosing the Right Algorithm: Based on your data type and problem complexity, select appropriate ML or deep learning models. For text classification, BERT-based models are often a strong choice, while for network analysis, GNNs might be preferred.

- Splitting Data: Divide your labeled dataset into training, validation, and test sets. The training set is used to teach the model, the validation set to fine-tune hyperparameters, and the test set to evaluate its final, unbiased performance.

- Training the Model: Feeding the preprocessed data to the chosen algorithm. This iterative process adjusts the model's internal parameters to minimize prediction errors.

- Hyperparameter Tuning: Optimizing the model's performance by adjusting parameters that are not learned from the data (e.g., learning rate, number of layers in a neural network).
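
The data-splitting step can be sketched as a stratified split, which keeps the ratio of fake to real items the same in each subset. The 80/10/10 fractions, the `stratified_split` helper, and the synthetic article IDs are assumptions for the example.

```python
import random
from collections import defaultdict


def stratified_split(items, labels, fractions=(0.8, 0.1, 0.1), seed=0):
    """Split (item, label) pairs into train/validation/test sets while
    preserving the label proportions in each set."""
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    by_label = defaultdict(list)
    for item, label in zip(items, labels):
        by_label[label].append((item, label))

    train, val, test = [], [], []
    for group in by_label.values():
        rng.shuffle(group)
        n_train = int(len(group) * fractions[0])
        n_val = int(len(group) * fractions[1])
        train += group[:n_train]
        val += group[n_train:n_train + n_val]
        test += group[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


# Hypothetical dataset: 20 fake and 80 real articles.
items = [f"article-{i}" for i in range(100)]
labels = ["fake"] * 20 + ["real"] * 80
train, val, test = stratified_split(items, labels)
```

For news data it can also be worth splitting by time or by source, so that the test set contains stories and outlets the model has never seen; a random split like this one tends to give more optimistic numbers.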

Evaluation Metrics and Performance Assessment

Once trained, the model's performance must be rigorously evaluated using metrics relevant to classification tasks:

- Accuracy: The proportion of correctly classified instances. While intuitive, it can be misleading with imbalanced datasets (e.g., far more real news than fake news).

- Precision: Of all items the model predicted as fake, how many were actually fake? (High precision means few false positives.)

- Recall (Sensitivity): Of all actual fake news items, how many did the model correctly identify? (High recall means few false negatives.)

- F1-Score: The harmonic mean of precision and recall, providing a balanced measure.

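- AUC-ROC (Area Under the Receiver Operating Characteristic Curve): Measures how well the model ranks fake items above real ones across all decision thresholds. It is less sensitive to class imbalance than accuracy, but under heavy imbalance it can look optimistic, so it is best reported alongside precision and recall.

- Addressing Imbalanced Datasets: Fake news instances are typically less frequent than real news. Techniques like oversampling the minority class (including synthetic oversampling such as SMOTE), undersampling the majority class, or class-weighted training are crucial. Resampling should be applied to the training set only, so that the validation and test sets keep the real class distribution.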
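
The relationship between these metrics is easiest to see on a small example. The sketch below computes precision, recall, and F1 from scratch for the "fake" class; the labels and predictions are made up, and `precision_recall_f1` is an illustrative helper.

```python
def precision_recall_f1(y_true, y_pred, positive="fake"):
    """Compute precision, recall, and F1 for the `positive` class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Hypothetical imbalanced evaluation set: 3 fake items, 5 real items.
y_true = ["fake", "fake", "fake", "real", "real", "real", "real", "real"]
y_pred = ["fake", "real", "real", "fake", "real", "real", "real", "real"]

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
```

Here accuracy is 0.625, which sounds tolerable, yet the model caught only one of the three fake items (recall of about 0.33) and half of its "fake" flags were wrong (precision of 0.5). This is the kind of gap that accuracy alone hides on imbalanced data.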

Deployment and Continuous Monitoring

- Integration: Deploying the trained model into a real-world system, such as a content moderation platform, a browser extension, or an API.

- Real-time Detection: Designing the system to process new content as it emerges, providing near real-time alerts.

- Continuous Monitoring and Retraining: Misinformation tactics evolve. Models must be continuously monitored for performance degradation (model drift) and retrained with new, labeled data to stay effective against emerging threats and new forms of disinformation.
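
A minimal sketch of drift monitoring, assuming that a sample of predictions is later checked by human fact-checkers: keep a rolling window of outcomes and raise a flag when accuracy in that window drops below a threshold. The `DriftMonitor` class, the window size, and the threshold are hypothetical choices.

```python
from collections import deque


class DriftMonitor:
    """Track accuracy over the most recent human-verified predictions."""

    def __init__(self, window=100, min_accuracy=0.85):
        self.outcomes = deque(maxlen=window)  # True where prediction was right
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual):
        self.outcomes.append(predicted == actual)

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        # Judge only once the window is full, to avoid noisy early alarms.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.min_accuracy


# Tiny window so the example is easy to follow by hand.
monitor = DriftMonitor(window=4, min_accuracy=0.75)
checked = [("fake", "fake"), ("real", "real"), ("real", "fake"), ("fake", "real")]
for predicted, actual in checked:
    monitor.record(predicted, actual)
```

With two of the last four predictions wrong, the windowed accuracy is 0.5 and `monitor.needs_retraining()` returns `True`. Given the class imbalance discussed earlier, tracking windowed precision and recall in the same way would likely be more informative than accuracy alone.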

Overcoming Challenges in ML-Powered Fake News Detection

While powerful, machine learning for fake news detection is not without its hurdles:

The Evolving Nature of Misinformation

Fake news creators are constantly adapting, using new linguistic styles, platforms, and sophisticated techniques (e.g., subtle manipulations, highly contextual satire). This creates an arms race where ML models must continuously evolve. Adversarial machine learning explores how models can be fooled, highlighting the need for robust, generalizable solutions.

Data Scarcity and Bias

High-quality, large-scale labeled datasets are expensive and time-consuming to create. Furthermore, datasets can contain inherent biases (e.g., reflecting the biases of the fact-checkers or the sources collected), leading to algorithmic bias in the model's predictions. Ensuring data diversity and fairness is paramount to avoid perpetuating societal inequalities or misclassifying legitimate content.

Contextual Nuance and Sarcasm

Machine learning models often struggle with understanding human nuances like satire, irony, sarcasm, or highly specific cultural contexts. A statement that is factual in one context might be misleading in another. This makes accurate credibility assessment challenging, as it requires a deeper understanding than just surface-level linguistic patterns.

Explainability and Transparency

Many advanced deep learning models are "black boxes," meaning it's difficult to understand why they made a particular prediction. For sensitive applications like fake news detection, where trust and accountability are crucial, understanding the model's reasoning (explainable AI) is vital. Users and decision-makers need to trust the system, and transparency helps build that trust.
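
For simpler models, explanations can be read directly from the parameters. The sketch below assumes a linear classifier whose score is a weighted sum of text features, and lists the terms that contributed most to one prediction. The feature values, the weights, and the `explain` helper are all invented for illustration; deep models need dedicated attribution methods instead.

```python
def explain(features, weights, top_k=3):
    """Rank terms by the size of their contribution (value * weight)
    to a linear model's score. Positive contributions push toward 'fake'."""
    contributions = {
        term: value * weights.get(term, 0.0)
        for term, value in features.items()
    }
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return ranked[:top_k]


# Hypothetical TF-IDF-style features for one article and learned weights.
features = {"miracle": 0.4, "cure": 0.3, "council": 0.2, "budget": 0.1}
weights = {"miracle": 2.0, "cure": 1.5, "council": -1.0, "budget": -0.5}
top = explain(features, weights, top_k=2)
```

In this example "miracle" and "cure" are the two strongest drivers toward a "fake" verdict. Showing a reviewer such a list alongside the score gives them something concrete to agree or disagree with.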

The Future of AI in Combating Disinformation

The landscape of fake news detection is rapidly evolving. The rise of advanced generative AI models (like GPT-4, Midjourney) means that creating highly convincing fake text, images, and videos is becoming easier and more accessible. This necessitates a new generation of detection models that can counter these sophisticated threats. Future directions include:

- Proactive Detection: Moving beyond reactive detection to anticipate and identify emerging misinformation campaigns before they go viral.

- Multi-modal Fusion: Even more sophisticated integration of text, image, video, and audio analysis for holistic content verification.

- Human-AI Collaboration: Systems that act as intelligent assistants to human fact-checkers, flagging suspicious content and providing initial analyses, but leaving the final judgment to human experts who can account for complex context and nuance. This emphasizes the importance of human oversight.

- Ethical AI Development: Ensuring that the development and deployment of these systems adhere to strong ethical guidelines, respecting privacy, avoiding bias, and promoting transparency. This contributes to building systems that are considered trustworthy sources of information.

- Information Literacy Integration: Beyond just detection, ML systems can potentially be integrated into educational tools to improve public information literacy, teaching users how to critically evaluate information themselves.

Frequently Asked Questions

What is the primary challenge in using ML for fake news detection?

The primary challenge lies in the constantly evolving nature of misinformation and the difficulty in obtaining large, diverse, and unbiased labeled datasets. Fake news creators continually adapt their tactics, requiring detection models to be continuously updated and retrained. Additionally, discerning sarcasm, satire, and deep contextual nuances remains a significant hurdle for current ML models, impacting accurate credibility assessment.

Can ML models detect deepfakes effectively?

Yes, to a useful degree. Deep learning models, particularly Convolutional Neural Networks (CNNs), can detect many deepfakes. These models are trained to identify subtle inconsistencies in facial movements, lighting, audio synchronization, and pixel patterns that are indicative of synthetic media. However, as deepfake generation technology improves, these cues become harder to find, and detection methods must continuously advance to keep pace.

How important is data quality in training these models?

Data quality is absolutely critical. The performance of any machine learning model is directly dependent on the quality, quantity, and representativeness of its training data. Poorly labeled, biased, or insufficient data will lead to models that perform poorly, generalize badly to new information, and may even perpetuate harmful algorithmic bias. Investing in rigorous data preprocessing and diverse dataset curation is fundamental for robust fake news detection.

What role does human oversight play in ML-driven fake news systems?

Human oversight is indispensable. While ML models can process vast amounts of data and identify patterns at scale, they lack human intuition, contextual understanding, and the ability to interpret complex nuances like satire or cultural references. Humans are crucial for training data labeling, validating model predictions, handling edge cases, interpreting ambiguous results, and adapting systems to new forms of misinformation. ML systems are best seen as powerful tools that augment, rather than replace, human fact-checking and content moderation efforts.
