Complete Guide

Quantum computing promises to reshape industries from medicine to finance, but how do we accurately measure its progress? Comparing the benchmark performance of quantum computers is not merely an academic exercise; researchers, vendors, and prospective users all depend on it to judge where the hardware actually stands. This guide covers how quantum processors are evaluated: the metrics, the methodologies, and the challenges involved in assessing their computational power. It also looks at how leading institutions and companies are working to establish standardized benchmarks that bring clarity to rapidly evolving hardware capabilities.

The Imperative of Benchmarking Quantum Systems

In classical computing, performance evaluation is relatively straightforward, relying on metrics like clock speed, memory capacity, and FLOPS. Quantum computers work on different principles, which makes these traditional figures largely irrelevant. A quantum system's power isn't just about the number of qubits; it's about their quality, connectivity, and ability to maintain coherence. Without a clear framework for comparing benchmark results, potential users struggle to identify the most suitable hardware for their applications, and developers lack a common standard to gauge their progress. This need has spurred intense research into metrics that move beyond simple qubit counts toward more holistic indicators of computational utility.

Why Traditional Metrics Fall Short

- Qubit Count Alone is Insufficient: While a higher number of qubits is generally desirable, it doesn't tell the whole story. Low-quality qubits with high error rates or short coherence times are less useful than fewer, high-fidelity ones.

- Classical Speed Metrics are Irrelevant: Quantum operations don't run on clock cycles in the same way classical processors do. Instead, the speed is often limited by qubit coherence and gate operation times.

- Complex Interactions: Quantum systems leverage entanglement and superposition, phenomena that have no direct classical analogues, making direct classical comparisons misleading.

Key Metrics for Quantum Performance Evaluation

To compare quantum computers meaningfully, one must look beyond superficial specifications. The quantum community has developed several metrics, each capturing a different facet of a quantum computer's capabilities. Hardware developers use them to track progress, and users rely on them to decide which platform best suits their needs.

Qubit Quality and Quantity

- Qubit Count: The raw number of qubits available. While not the sole indicator, it defines the potential scale of the problems a quantum computer can theoretically address.

- Qubit Coherence Time (T1 and T2): This measures how long a qubit can maintain its quantum state before decohering due to environmental noise. Longer coherence times allow for more complex and longer-running quantum algorithms. T1 refers to the energy relaxation time, while T2 is the dephasing time, often the more limiting factor.

- Gate Fidelity/Error Rates: The accuracy with which quantum gates (the fundamental operations on qubits) are executed. High gate fidelity is paramount, as errors accumulate rapidly in quantum circuits, severely impacting computational accuracy. Typical error rates are expressed as a percentage, with lower being better.
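The way gate errors accumulate and coherence time limits circuit length can be sketched with a little arithmetic. The snippet below is a rough model, not a vendor formula: it assumes every gate fails independently with the same error rate, and the function names and example numbers are hypothetical.

```python
def estimated_success_probability(gate_error: float, n_gates: int) -> float:
    """Crude estimate: the circuit succeeds only if every gate succeeds.

    Assumes independent, identical gate errors (an illustrative simplification).
    """
    return (1.0 - gate_error) ** n_gates


def coherence_limited_gates(t2_us: float, gate_time_us: float) -> int:
    """Rough number of sequential gates that fit inside one T2 window.

    Both arguments are in microseconds.
    """
    return int(t2_us / gate_time_us)


# Hypothetical device: 1% error per gate, T2 = 100 us, 0.5 us per gate.
p = estimated_success_probability(gate_error=0.01, n_gates=100)
budget = coherence_limited_gates(t2_us=100.0, gate_time_us=0.5)
print(f"success probability after 100 gates: {p:.3f}")
print(f"sequential gates within T2: {budget}")
```

Even under this simplified model, a 1% gate error leaves only about a one-in-three chance of an error-free run after 100 gates, which is why small improvements in fidelity matter so much.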

Integrated Performance Metrics

- Quantum Volume (QV): Introduced by IBM, Quantum Volume is a single-number metric designed to capture a quantum computer's overall computational power. It reflects the number of qubits, their connectivity, and gate and measurement errors, along with how well the compiler maps circuits onto the device. It is determined by finding the largest "square" random circuit, one whose depth equals its width n, that the machine can execute successfully, and is reported as 2^n. A higher Quantum Volume indicates a machine able to run larger and more complex circuits.

- Circuit Depth: This metric refers to the number of sequential quantum gate operations that can be performed before errors accumulate to an unacceptable level. A deeper circuit depth allows for more complex algorithms to be executed, a critical factor for achieving quantum advantage.

- Application-Specific Benchmarks: While generic metrics like QV are useful, the ultimate test of a quantum computer's performance lies in its ability to solve specific, real-world problems. These benchmarks involve running actual quantum algorithms designed for tasks like drug discovery, materials science, or financial modeling. This approach often reveals practical limitations and strengths that generic benchmarks might miss.

- Quantum Advantage/Supremacy: The holy grail of quantum computing, this refers to the point where a quantum computer can perform a computational task that no classical supercomputer can perform in a reasonable amount of time. While specific instances of "quantum supremacy" have been demonstrated (e.g., Google's Sycamore processor), achieving practical quantum advantage for useful problems remains a key goal.
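For Quantum Volume, "successfully execute" is usually judged with a heavy-output test: the outputs that an ideal simulation says are more likely than the median should show up in more than two-thirds of the measured shots. The sketch below shows only that scoring step under simplifying assumptions. The function names and example numbers are hypothetical, and the full protocol also requires many random circuits and a statistical confidence bound, which is omitted here.

```python
import statistics


def heavy_outputs(ideal_probs: dict[str, float]) -> set[str]:
    """Bitstrings whose ideal probability is above the median ideal probability.

    ideal_probs should list every bitstring of the circuit's width.
    """
    median = statistics.median(ideal_probs.values())
    return {bits for bits, p in ideal_probs.items() if p > median}


def heavy_output_probability(ideal_probs: dict[str, float],
                             counts: dict[str, int]) -> float:
    """Fraction of measured shots that landed on a heavy output."""
    heavy = heavy_outputs(ideal_probs)
    shots = sum(counts.values())
    return sum(c for bits, c in counts.items() if bits in heavy) / shots


def passes_threshold(hop_values: list[float]) -> bool:
    """Simplified pass check: mean heavy-output probability above 2/3.

    The real test also demands a confidence bound; that step is left out.
    """
    return statistics.mean(hop_values) > 2 / 3


# Hypothetical 2-qubit example: ideal distribution and measured counts.
ideal = {"00": 0.40, "01": 0.10, "10": 0.35, "11": 0.15}
measured = {"00": 380, "01": 120, "10": 330, "11": 170}
hop = heavy_output_probability(ideal, measured)
print(f"heavy-output probability: {hop:.2f}")
```

If width-n, depth-n circuits pass this test, the device's Quantum Volume is at least 2^n.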

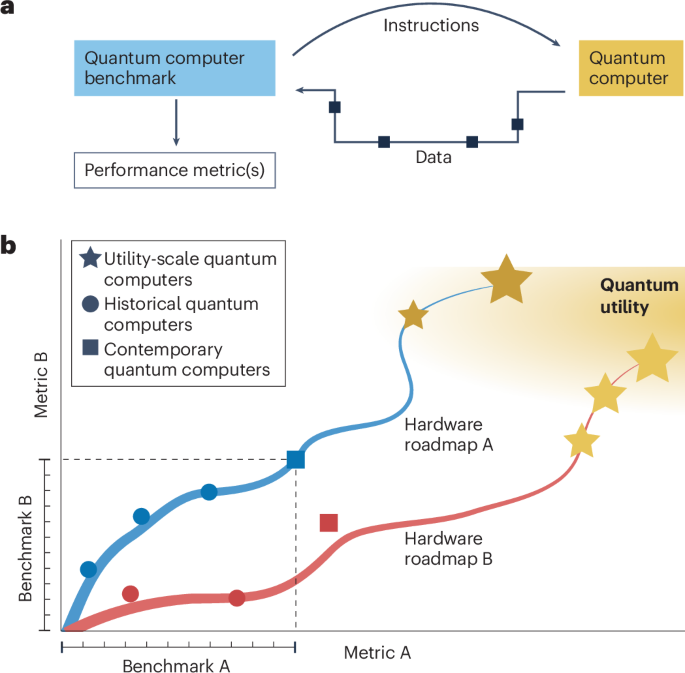

Benchmarking Methodologies and Frameworks

Comparing the performance of quantum computers requires rigorous methodologies and often relies on specialized software frameworks. These frameworks aim to standardize the testing process, allowing more reliable and reproducible comparisons across hardware platforms and architectures.

Standardized Benchmarking Suites

Leading quantum software development kits (SDKs) often include benchmarking tools and libraries:

- Qiskit Runtime (IBM): Offers capabilities to run complex quantum circuits efficiently and provides access to IBM's quantum processors, allowing researchers to perform benchmark tests directly on real hardware. This ecosystem facilitates the measurement of Quantum Volume and other performance indicators.

- Cirq (Google): Google's open-source framework for creating, editing, and invoking quantum circuits. It supports benchmarking on Google's Sycamore processor and other quantum simulators, enabling detailed analysis of gate performance and error rates.

- OpenQASM/QIR: These are intermediate representations for quantum programs, aiming to provide a common language for describing quantum circuits, which can then be compiled and run on various quantum backends. This standardization is crucial for developing universal benchmarks.

Cross-Platform Comparisons: Complexities and Insights

Comparing different quantum hardware architectures (e.g., superconducting qubits vs. trapped ions) is inherently challenging due to their distinct strengths and weaknesses. Superconducting qubits often boast higher qubit counts but can have complex wiring and cooling requirements. Trapped-ion systems, conversely, typically offer higher gate fidelities and all-to-all connectivity but may be slower or have fewer qubits. Benchmarking efforts must account for these architectural differences, often by running the same quantum circuits or algorithms on each platform and analyzing the outcomes, fidelity, and execution times. This allows for a deeper understanding of where each quantum processor excels or falls short.
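One simple way to analyze the outcomes of the same circuit on different platforms is to score each device's measured distribution against the ideal one. The sketch below uses the Hellinger fidelity, one common choice among several; the helper names and the example counts are hypothetical.

```python
import math


def normalize(counts: dict[str, int]) -> dict[str, float]:
    """Turn raw shot counts into a probability distribution."""
    shots = sum(counts.values())
    return {bits: c / shots for bits, c in counts.items()}


def hellinger_fidelity(counts_a: dict[str, int],
                       counts_b: dict[str, int]) -> float:
    """Hellinger fidelity between two count dictionaries.

    Computed as (sum_i sqrt(p_i * q_i)) ** 2; 1.0 means identical
    distributions, 0.0 means no overlap at all.
    """
    p, q = normalize(counts_a), normalize(counts_b)
    keys = set(p) | set(q)
    overlap = sum(math.sqrt(p.get(k, 0.0) * q.get(k, 0.0)) for k in keys)
    return overlap ** 2


# Hypothetical Bell-state experiment: ideal result vs. two devices.
ideal = {"00": 500, "11": 500}
device_a = {"00": 480, "11": 470, "01": 30, "10": 20}
device_b = {"00": 430, "11": 420, "01": 80, "10": 70}
for name, counts in [("device A", device_a), ("device B", device_b)]:
    print(name, round(hellinger_fidelity(ideal, counts), 3))
```

A score like this says nothing about execution time or queueing, so it complements rather than replaces the timing data mentioned above.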

The Role of Classical Simulation

Before deploying an algorithm on actual quantum hardware, it's often simulated on classical computers. This classical simulation serves a dual purpose: it helps verify the correctness of quantum algorithms and provides a baseline for performance. When a quantum computer can outperform its classical counterpart for a specific task, it signifies a step towards practical quantum advantage. However, the memory needed for an exact state-vector simulation doubles with every added qubit, and other simulation methods become more expensive as circuits grow deeper and more entangled, so exactly simulating large quantum systems is impractical.
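The exponential cost is easy to see for a full state-vector simulation, which stores 2^n complex amplitudes for n qubits. The sketch below assumes 16 bytes per amplitude (two 64-bit floats); the function name is hypothetical, and other simulation methods trade memory for time in different ways.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to hold every amplitude of an n-qubit state vector."""
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (20, 30, 40, 50):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {gib:,.3f} GiB")
```

Each extra qubit doubles the requirement, so a laptop-sized problem at 30 qubits becomes a data-center-sized one well before 50.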

Leading Quantum Hardware Architectures and Their Benchmarks

Different quantum hardware companies employ distinct approaches, each with performance characteristics that become evident when their benchmark results are compared.

Superconducting Qubits (IBM, Google, Rigetti)

- Characteristics: These systems operate at extremely low temperatures (millikelvin range) and use superconducting circuits as qubits. They are known for scalability in terms of qubit numbers.

- Benchmarking Focus: IBM has heavily promoted Quantum Volume, while its Eagle (127-qubit) and Osprey (433-qubit) processors are best known for scaling up qubit count. Google's Sycamore processor famously demonstrated quantum supremacy on a specific random circuit sampling task. Rigetti also focuses on increasing qubit count and improving gate fidelity.

- Challenges: Maintaining coherence at scale, complex cryogenics, and crosstalk between qubits.

Trapped-Ion Qubits (IonQ, Quantinuum)

- Characteristics: Ions are suspended in electromagnetic fields and manipulated with lasers. They typically boast high gate fidelities and all-to-all connectivity (any qubit can interact directly with any other).

- Benchmarking Focus: Quantinuum (a Honeywell spin-off) reports Quantum Volume figures for its System Model H1 and H2 processors, emphasizing their high fidelity and connectivity. IonQ also highlights high gate fidelities and reports "algorithmic qubits" (a measure of effective qubits based on performance on benchmark algorithms).

- Strengths: Excellent coherence times, high gate fidelity, good connectivity.

Neutral Atoms (Pasqal, ColdQuanta, now Infleqtion)

- Characteristics: Utilizes arrays of neutral atoms trapped by optical tweezers. This architecture offers unique scaling potential and reconfigurable qubit arrangements.

- Benchmarking Focus: Still an emerging field, benchmarks often focus on the number of controllable qubits, their arrangement flexibility, and initial gate fidelities. They show promise for large-scale entangled states.

Photonic Quantum Computing (PsiQuantum, Xanadu)

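- Characteristics: Employs photons (light particles) as qubits. This approach offers potential advantages in terms of room-temperature operation for much of the system, although single-photon detectors often still require cryogenic cooling, and compatibility with existing fiber optic infrastructure.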

- Benchmarking Focus: Benchmarks typically revolve around photon generation efficiency, gate fidelity (often probabilistic), and the ability to scale up entanglement generation. PsiQuantum aims for fault-tolerant quantum computing through massive photonics integration.

Challenges and Nuances in Quantum Benchmarking

Despite significant progress, comparing quantum benchmark results is fraught with challenges, making definitive statements about "the best" quantum computer difficult.

Noise and Error Mitigation

Quantum systems are inherently noisy. Environmental interactions lead to decoherence and errors during gate operations. While error correction is a long-term goal, current "Noisy Intermediate-Scale Quantum" (NISQ) devices rely heavily on error mitigation techniques to improve results. Benchmarks must account for the effectiveness of these mitigation strategies, as they can significantly impact the apparent performance.
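To make the idea of error mitigation concrete, here is a sketch of zero-noise extrapolation, one widely used technique that the text above does not name specifically. The same circuit is run at deliberately amplified noise levels and the results are extrapolated back to the zero-noise limit. The function name and measurement values are hypothetical, and a straight-line fit is only one of several possible extrapolation models.

```python
def zero_noise_extrapolate(scale_factors: list[float],
                           expectation_values: list[float]) -> float:
    """Fit a straight line by least squares and evaluate it at noise scale 0.

    scale_factors: noise amplification levels (1.0 = the device's native noise).
    expectation_values: measured observable at each level.
    """
    n = len(scale_factors)
    mean_x = sum(scale_factors) / n
    mean_y = sum(expectation_values) / n
    sxx = sum((x - mean_x) ** 2 for x in scale_factors)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(scale_factors, expectation_values))
    slope = sxy / sxx
    # The intercept of the fitted line is the zero-noise estimate.
    return mean_y - slope * mean_x


# Hypothetical measurements of one observable at 1x, 2x, and 3x noise.
scales = [1.0, 2.0, 3.0]
values = [0.80, 0.60, 0.40]
print(f"mitigated estimate: {zero_noise_extrapolate(scales, values):.2f}")
```

A benchmark report that quotes the mitigated value rather than the raw 1x value describes the hardware plus its mitigation software, which is why the two should be reported separately.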

Scalability vs. Performance

There's often a trade-off between the number of qubits and their individual quality. A system with many qubits but poor coherence or high error rates might be less useful than one with fewer, higher-quality qubits. Benchmarks need to balance these factors to provide a holistic view of a system's true utility.

Reproducibility of Results

Due to the probabilistic nature of quantum mechanics and the sensitivity of quantum hardware to environmental factors, reproducing exact benchmark results can be challenging. Researchers strive for statistical significance and clear reporting of experimental conditions to ensure results are reliable.
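Shot noise alone puts a floor on how precisely a benchmark number can be reproduced. The sketch below computes a normal-approximation 95% confidence interval for a success probability estimated from a finite number of shots. The function name and numbers are hypothetical, and the approximation is poor for very small shot counts or probabilities near 0 or 1.

```python
import math


def success_confidence_interval(successes: int, shots: int,
                                z: float = 1.96) -> tuple[float, float]:
    """Normal-approximation confidence interval for a binomial proportion.

    z = 1.96 corresponds to roughly 95% confidence.
    """
    p = successes / shots
    half_width = z * math.sqrt(p * (1.0 - p) / shots)
    # Clamp to the valid probability range.
    return max(0.0, p - half_width), min(1.0, p + half_width)


# Hypothetical run: 710 successful outcomes out of 1000 shots.
low, high = success_confidence_interval(710, 1000)
print(f"95% CI: [{low:.3f}, {high:.3f}]")
```

Two results whose intervals overlap heavily cannot be ranked with confidence; quadrupling the shot count halves the interval width.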

The Moving Target Problem

The field of quantum computing is evolving rapidly. What constitutes a leading benchmark result today might be surpassed in a matter of months. Any benchmark comparison is therefore a snapshot in time, and both the results and the metrics themselves need continuous re-evaluation.

Actionable Insights for Evaluating Quantum Systems

For those looking to engage with quantum computing, understanding how to interpret benchmarks is critical. Here are some practical tips:

- Look Beyond Qubit Count: While a high qubit count is appealing, prioritize metrics like Quantum Volume, gate fidelity, and coherence times. These indicators provide a much more realistic picture of a quantum computer's current utility.

- Consider Your Application: Different quantum architectures excel at different types of problems. For instance, trapped ions might be better for certain types of quantum simulations due to their high connectivity, while superconducting qubits might be preferred for their potential scalability. Match the hardware's strengths to your specific problem domain.

- Examine Published Research: Reputable academic papers and industry reports often provide detailed benchmark results and methodologies. These peer-reviewed sources offer deeper insights than marketing claims. Look for results that include confidence intervals and detailed experimental setups.

- Understand Error Mitigation: In the NISQ era, error mitigation is key. Investigate what error mitigation techniques are employed by a particular platform and how effective they are. This directly impacts the reliability of your computational results.

- Stay Updated: The quantum landscape is dynamic. Follow leading research groups, companies, and industry news to stay abreast of the latest advancements in quantum processor capabilities and benchmarking standards.

- Experiment with Quantum Cloud Platforms: Many quantum hardware providers offer cloud access. Utilize these platforms to run small-scale experiments and directly experience the performance characteristics of different systems. This hands-on experience is invaluable.

Future Trends in Quantum Benchmarking

Quantum benchmarking continues to evolve. Future trends are likely to focus on more application-centric metrics and on benchmarks that can be applied fairly across different architectures.

- Application-Centric Benchmarks: As quantum computers become more capable, the focus will shift from abstract metrics to how well they solve specific, commercially relevant problems. This will involve benchmarking the performance of entire quantum algorithms rather than just individual gates or small circuits.

- Standardization Efforts: International bodies and industry consortiums are working towards establishing universally accepted benchmarking standards. This will make it easier to compare systems from different vendors on an equal footing.

- Benchmarking Quantum Software: Beyond hardware, the performance of quantum software, compilers, and optimization techniques will also become a critical area for benchmarking. Efficient software can significantly enhance the effective performance of even modest quantum hardware.

Frequently Asked Questions

What is Quantum Volume and why is it important for comparing quantum computers?

Quantum Volume (QV) is an integrated benchmark metric introduced by IBM that quantifies the overall computational capability of a quantum computer. It reflects not just the number of qubits, but also their connectivity, coherence, and gate fidelities. A higher QV indicates a more powerful quantum computer, capable of executing more complex quantum circuits. It's important because it provides a single number that summarizes multiple critical aspects of hardware performance, making comparisons more meaningful than simple qubit counts.

Why are quantum computing benchmarks difficult compared to classical computing benchmarks?

Quantum computing benchmarks are inherently more difficult because of the principles quantum hardware relies on. Unlike classical bits, qubits are fragile and susceptible to noise, leading to decoherence and errors. Metrics like clock speed and RAM are irrelevant. Instead, benchmarks must account for factors like qubit coherence time, gate fidelity, and entanglement capabilities, which are complex to measure and vary significantly between hardware architectures. The probabilistic nature of quantum measurements adds further complexity, requiring statistical analysis and careful experimental design to ensure reproducible and meaningful results.

How do different quantum architectures compare in terms of their benchmark performance?

Different quantum architectures, such as superconducting qubits (IBM, Google), trapped ions (IonQ, Quantinuum), and neutral atoms (Pasqal), each have distinct strengths and weaknesses reflected in their benchmark performance. Superconducting qubits often lead in raw qubit count, while trapped-ion systems typically boast superior gate fidelities and all-to-all connectivity, making them excellent for certain algorithms. Neutral atoms are emerging with promising scalability. Direct comparison is challenging due to these architectural differences, and often requires tailored benchmarks or application-specific tests to highlight each platform's advantages for different problem sets. There is no single "best" architecture; the optimal choice depends on the specific computational task and the current stage of technological development.

What does "quantum advantage" mean in the context of benchmarking?

Quantum advantage (sometimes referred to as quantum supremacy) is the point where a quantum computer can perform a specific computational task significantly faster or more efficiently than any classical supercomputer. This doesn't mean the quantum computer can solve every problem better, but rather that it can tackle a particular problem that is practically impossible for even the most powerful classical machines within a reasonable timeframe. Achieving quantum advantage is a major milestone, demonstrating that quantum computers are moving beyond theoretical curiosities to devices with real computational power, even if the initial demonstrations are for highly specialized, non-practical problems.
